AI chip giant Nvidia’s blistering growth in recent years has sprung from the data center. On Monday night, CEO Jensen Huang made the case that Nvidia’s next wave of expansion will encompass much more, stretching from the warehouse floor to city streets and beyond.

Huang’s slate of announcements and pronouncements on artificial intelligence at the annual CES conference in Las Vegas reinforces our conviction that Nvidia is an essential stock to own, even after back-to-back years of monster gains that turned it into one of the world’s most valuable companies. The stock’s more than 5% pullback in Tuesday’s session strikes us as little more than a “sell the news” reaction. After all, shares closed at a record Monday.

Huang offered up plenty of exciting new products, including a new video game chip that uses AI to improve graphics rendering and a model that can improve autonomous driving and robotics systems. However, the night was not just about Nvidia’s product roadmap. It was more about presenting a broader roadmap for artificial intelligence, as the industry matures from creative-minded generative AI chatbots (such as ChatGPT, which catalyzed this boom two-plus years ago) into new frontiers like “agentic AI” and “physical AI.”

Agentic AI refers to software applications that can autonomously complete multi-step tasks, while physical AI is defined by self-driving vehicles and general robotics.

Of course, we’re only scratching the surface of agentic AI (consider fellow Club name Salesforce’s buzzy new offering Agentforce) and seeing early glimpses of physical AI through Google-owned Waymo’s robotaxis, Tesla’s advanced driver assistance system and occasional behind-the-scenes looks at so-called humanoid robots made by the likes of Boston Dynamics and other startups.

Still, it’s clear that despite Nvidia’s incredible growth since the launch of ChatGPT sparked a gold rush for its market-leading data center chips, its best days are ahead of it.
Nvidia will realize that potential because it provides not only the hardware needed for agentic and physical AI systems, but also the accompanying software that runs atop its chips.

While these areas are long-term opportunities, Huang also provided ample reason to be optimistic on the near term. That includes taking an axe to the price of high-quality performance in the video game market, which is where Nvidia began selling its innovative graphics processing units (GPUs) in the 1990s before finding a home in the world of AI.

Specifically, Huang announced that Nvidia applied its next-generation data center chip architecture, known as Blackwell, to its latest video game GPU. Nvidia’s new entry-level gaming GPU, the Blackwell-based RTX 5070, will offer equivalent performance to its prior generation’s highest-end offering, the RTX 4090, with half the power consumption. That can translate into a roughly 40% improvement in battery life for gaming laptops that run the chips. Utilizing AI-enabled image rendering technology invented by Nvidia, the 5070 chip will cost only $549; the 4090, by contrast, sells for $1,599.

Chart: Nvidia’s stock performance over the past 12 months compared with the S&P 500.

Nvidia is applying that value dynamic to more than just the consumer-oriented gaming chip. It’s happening inside the data center, which is on track to account for roughly 88% of companywide revenue in the 12 months ending in January, according to FactSet; gaming is projected to be about 9%.

Huang confirmed that Blackwell-based data center chips are now in full production while arguing that the rationale for deep-pocketed customers such as Club holding Microsoft to upgrade to Nvidia’s latest hardware remains fully intact. That rationale is centered on the concept of scaling laws and has become somewhat of a question mark for investors in recent months.
Essentially, scaling laws say that the more compute power provided to AI models, the more effective they become. The way Huang tells it, it would be almost financially irresponsible not to upgrade, in contrast to concerns among some investors that tech giants are overspending on AI infrastructure.

“The scaling laws are driving computing so hard that this level of computation, Blackwell over our last generation, improves the performance per watt by a factor of four [and] performance per dollar by a factor three. That basically says that, in one generation, we reduced the cost of training these by a factor of three, or if you increase the size of your model by a factor three, it’s about the same cost.”

In discussing Nvidia’s next frontiers, Huang focused much of his commentary on the self-driving space. “I predict that this will likely be the first multi-trillion dollar robotics industry,” he said.

The CEO highlighted Nvidia’s new partnership with Toyota, noting that the world’s largest automaker by sales will use the chipmaker’s autonomous driving platform, dubbed Drive AGX, for its next-generation vehicles. He said Nvidia is expecting around $5 billion in automotive-related revenues in its upcoming fiscal year, up from about $4 billion this year.

Keep in mind: Those figures likely include additional sales to the auto industry beyond what is officially recorded in the company’s automotive segment, which primarily covers revenue from its self-driving platforms. Most likely, those additional sales show up within the data center segment.

Huang also showed off a new model called Cosmos that is designed to help train AI systems for autonomous vehicles and robots, which are seeing increased adoption in places like warehouses and factories. Rather than needing mountains of text data like the system that underpins ChatGPT, systems for physical AI need to be trained on videos of humans walking, hands moving and other things that occur in nature.
Nvidia created Cosmos to make that training process more cost-effective, Huang said. “The ChatGPT moment for general robotics is just around the corner,” he argued.

The software that Huang discussed also extends to agentic AI with the creation of “Nvidia AI Blueprints,” a suite of tools for developers to build custom agents for their own companies. While seemingly incremental right now, this fits into the larger narrative of Nvidia bolstering its high-margin software business to help smooth out some of the inherent lumpiness of hardware sales.

In another software nugget, Nvidia showed its respect for the work of fellow Club holding Meta Platforms, which created the popular open-source Llama large language models, or LLMs. Building on that success, Nvidia announced the Llama Nemotron family of LLMs to help businesses build and implement various agentic AI applications.

Bottom line

The selling in Nvidia shares Tuesday does not impact our reaction to Huang’s presentation on Monday night. It met, if not exceeded, our expectations. Nvidia’s gaming chips are now a better value than ever, and Blackwell is in full production and ready to drive another year of data center growth as generative AI use cases continue to expand and enterprises begin testing ways to use AI agents. Meanwhile, the foundation for physical AI is being set, and we appear to be on the verge of significant growth in the automotive space. The company has shown a desire to bring AI to all industries.

Perhaps more important for the long-term investor, Nvidia is clearly working off of a higher-level roadmap for artificial intelligence that will drive the direction of offerings in the years to come. Autonomous vehicles show how important that higher-level north star is.
That is an industry where Nvidia’s various hardware and software offerings work together to create a flywheel of continuous learning and improvement for these advanced operating systems. It’s hard to imagine Nvidia’s auto revenues going anywhere but up from here.

Huang’s keynote illustrates why we can confidently reiterate our view that over the long term, investors will be best served by owning a core position in Nvidia, rather than trying to trade in and out of the stock. While there will surely be pullbacks in the stock (just like we’re seeing Tuesday, or saw at various points last year), what’s clear is the world needs significantly more computing power than it currently has. That requires not only more powerful hardware but also more capable software. As we see with the new generation of RTX gaming chips, the smart pairing of the two leads to better performance, efficiency, and value for customers.

That matters a great deal considering one of the biggest questions lingering over the AI boom is figuring out a way to power all the data centers being built. We plan to stick with Nvidia while we await the answer.

(Jim Cramer’s Charitable Trust is long NVDA, GOOGL and META.)

As a subscriber to the CNBC Investing Club with Jim Cramer, you will receive a trade alert before Jim makes a trade. Jim waits 45 minutes after sending a trade alert before buying or selling a stock in his charitable trust’s portfolio. If Jim has talked about a stock on CNBC TV, he waits 72 hours after issuing the trade alert before executing the trade.

THE ABOVE INVESTING CLUB INFORMATION IS SUBJECT TO OUR TERMS AND CONDITIONS AND PRIVACY POLICY, TOGETHER WITH OUR DISCLAIMER. NO FIDUCIARY OBLIGATION OR DUTY EXISTS, OR IS CREATED, BY VIRTUE OF YOUR RECEIPT OF ANY INFORMATION PROVIDED IN CONNECTION WITH THE INVESTING CLUB. NO SPECIFIC OUTCOME OR PROFIT IS GUARANTEED.

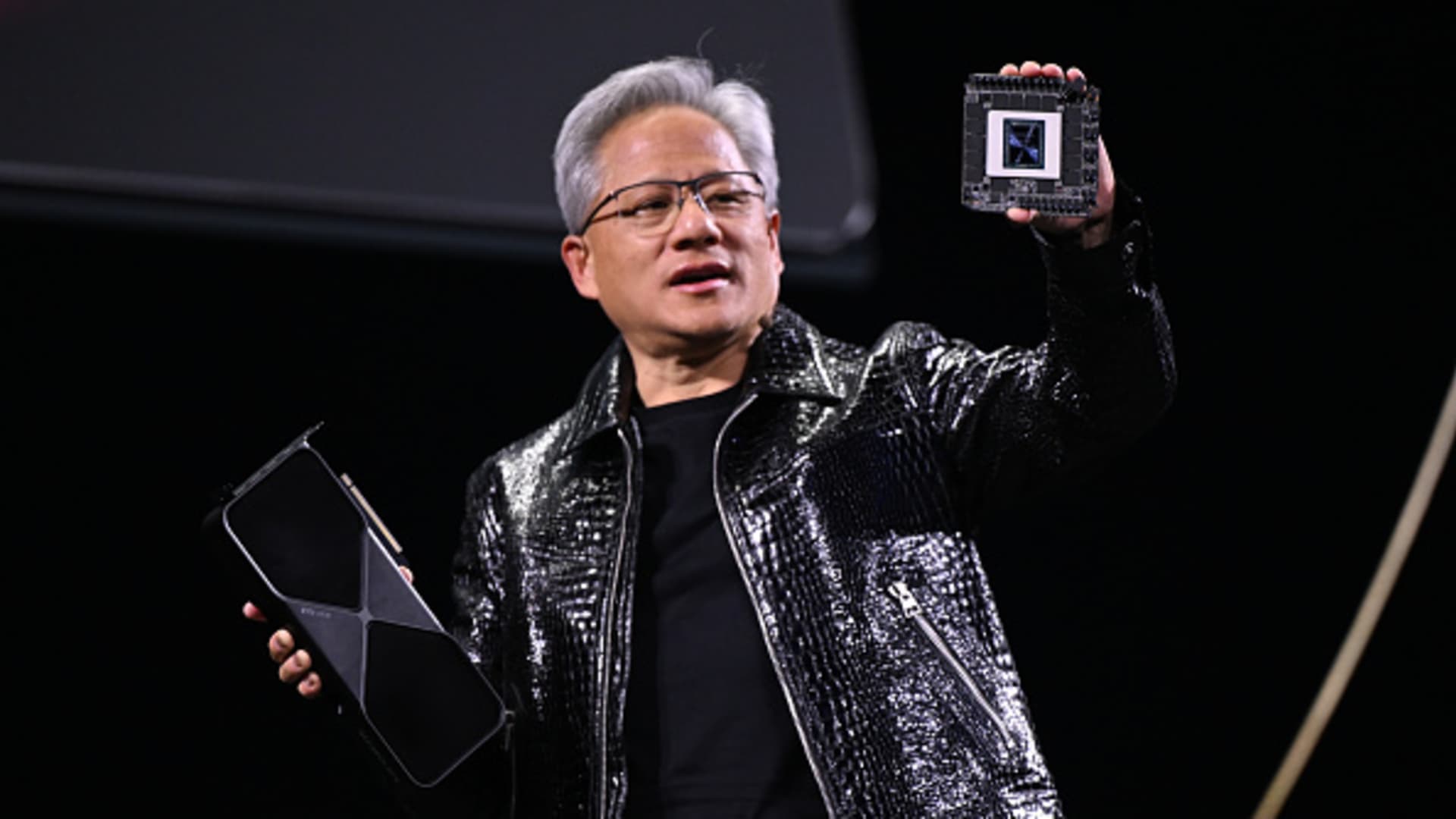

Nvidia CEO Jensen Huang delivers a keynote address at the Consumer Electronics Show (CES) 2025, showcasing the company’s latest innovations in Las Vegas, Nevada, USA, on January 6, 2025.

Artur Widak | Anadolu | Getty Images
